Amazon Data Firehose

| Version | 1.9.0 (View all) |

| Subscription level What's this? |

Basic |

| Developed by What's this? |

Elastic |

| Minimum Kibana version(s) | 9.0.0 8.13.0 |

Amazon Data Firehose integration offers users a way to stream logs and CloudWatch metrics from Firehose to Elastic Cloud. This integration includes predefined rules that automatically route AWS service logs and CloudWatch metrics to the respective integrations, which include field mappings, ingest pipelines, and predefined dashboards.

Here is a list of log types that are supported by this integration:

| AWS service log | Log destination |

|---|---|

| API Gateway | CloudWatch |

| CloudFront | S3 |

| CloudTrail | CloudWatch |

| ELB | S3 |

| Network Firewall | Firehose, CloudWatch, S3 |

| Route53 Public DNS | CloudWatch |

| Route53 Resolver | Firehose, CloudWatch, S3 |

| S3 access | S3 |

| VPC Flow | Firehose, CloudWatch, S3 |

| WAF | Firehose, CloudWatch. S3 |

Here is a list of CloudWatch metrics that are supported by this integration:

| AWS service monitoring metrics |

|---|

| API Gateway |

| DynamoDB |

| EBS |

| EC2 |

| ECS |

| ELB |

| EMR |

| Network Firewall |

| Kafka |

| Kinesis |

| Lambda |

| NATGateway |

| RDS |

| S3 |

| S3 Storage Lens |

| SNS |

| SQS |

| TransitGateway |

| Usage |

| VPN |

It is not possible to configure a delivery stream to send data to Elastic Cloud via PrivateLink (VPC endpoint). This is a current limitation in Firehose, which we are working with AWS to resolve.

Install the relevant integrations in Kibana

To effectively utilize your data, you need to install the necessary integrations. Log in to Kibana, navigate to Management > Integrations in the sidebar.

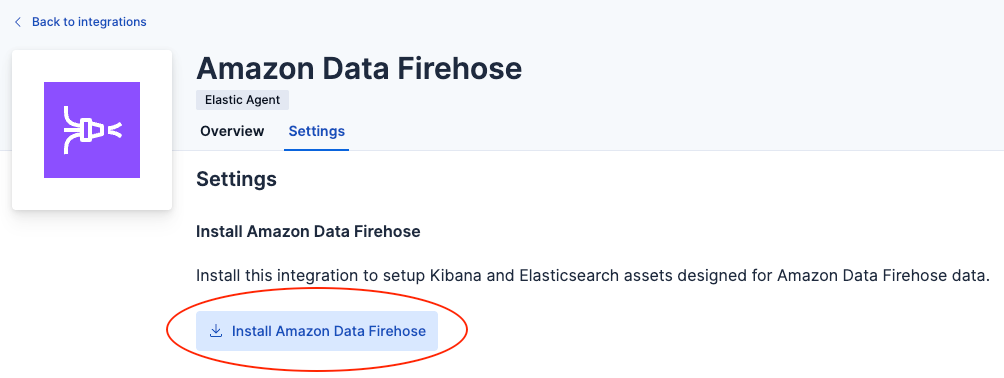

- First Install Amazon Data Firehose Integration assets. Assets installed through this integration will receive and route your Firehose data to various backend datasets. Find it by searching or browse the catalog.

Navigate to the Settings tab and click Install Amazon Data Firehose. Confirm by clicking Install Amazon Data Firehose in the popup.

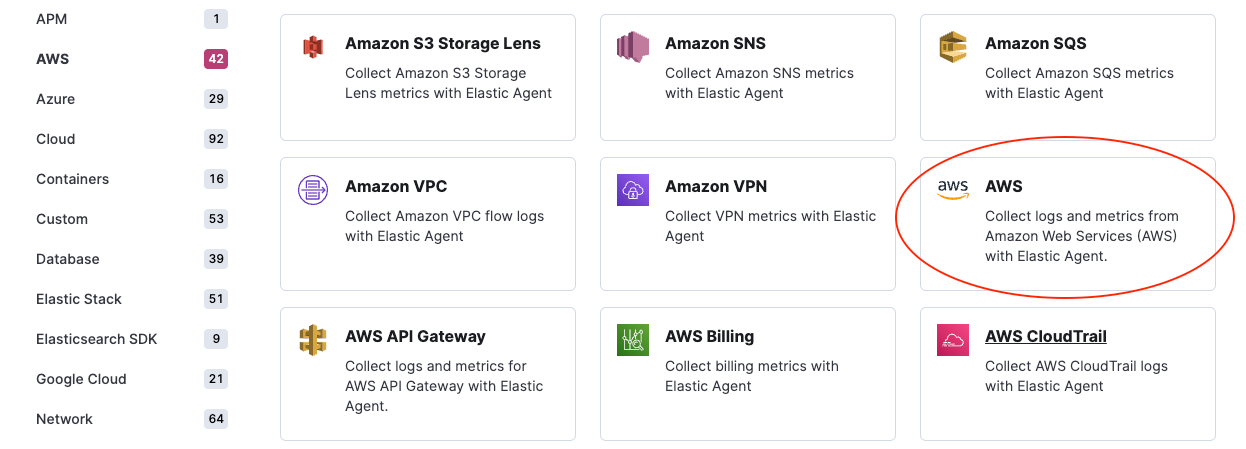

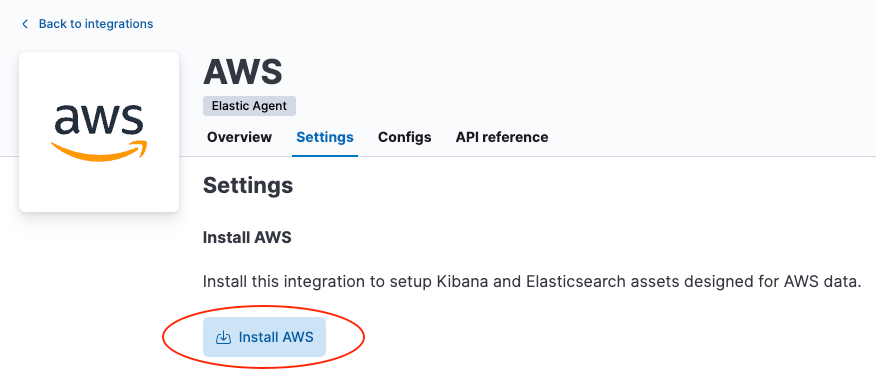

- Then install the AWS integration assets. This integration provides assets such as index templates, ingest pipelines, and dashboards that will serve as the destination points for the data routed by the Firehose Integration. Find the AWS integration by searching or browsing the catalog.

Navigate to the Settings tab and click Install AWS assets. Confirm by clicking Install AWS in the popup.

Create a delivery stream in Amazon Data Firehose

Sign into the AWS console and navigate to Amazon Data Firehose. Click Create Firehose stream. Configure the delivery stream using the following settings:

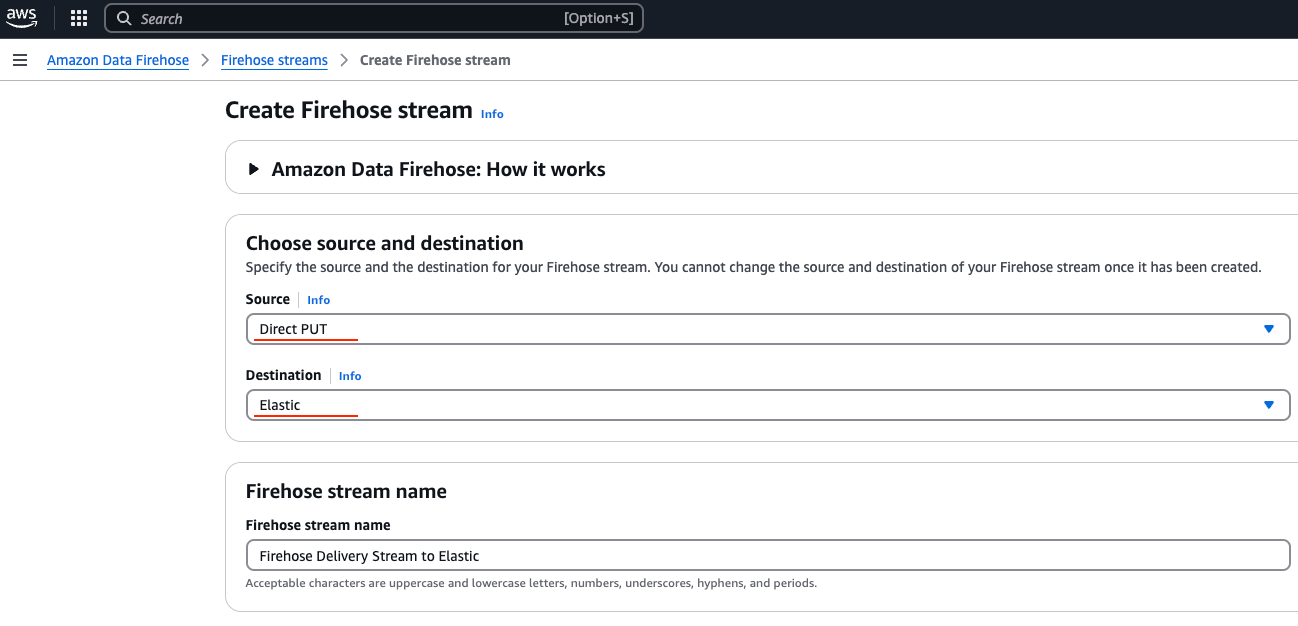

Choose source and destination

Unless you are streaming data from Kinesis Data Streams, set source to Direct PUT (see Setup guide for more details on data sources).

Set destination to Elastic.

Delivery stream name

Provide a meaningful name that will allow you to identify this delivery stream later.

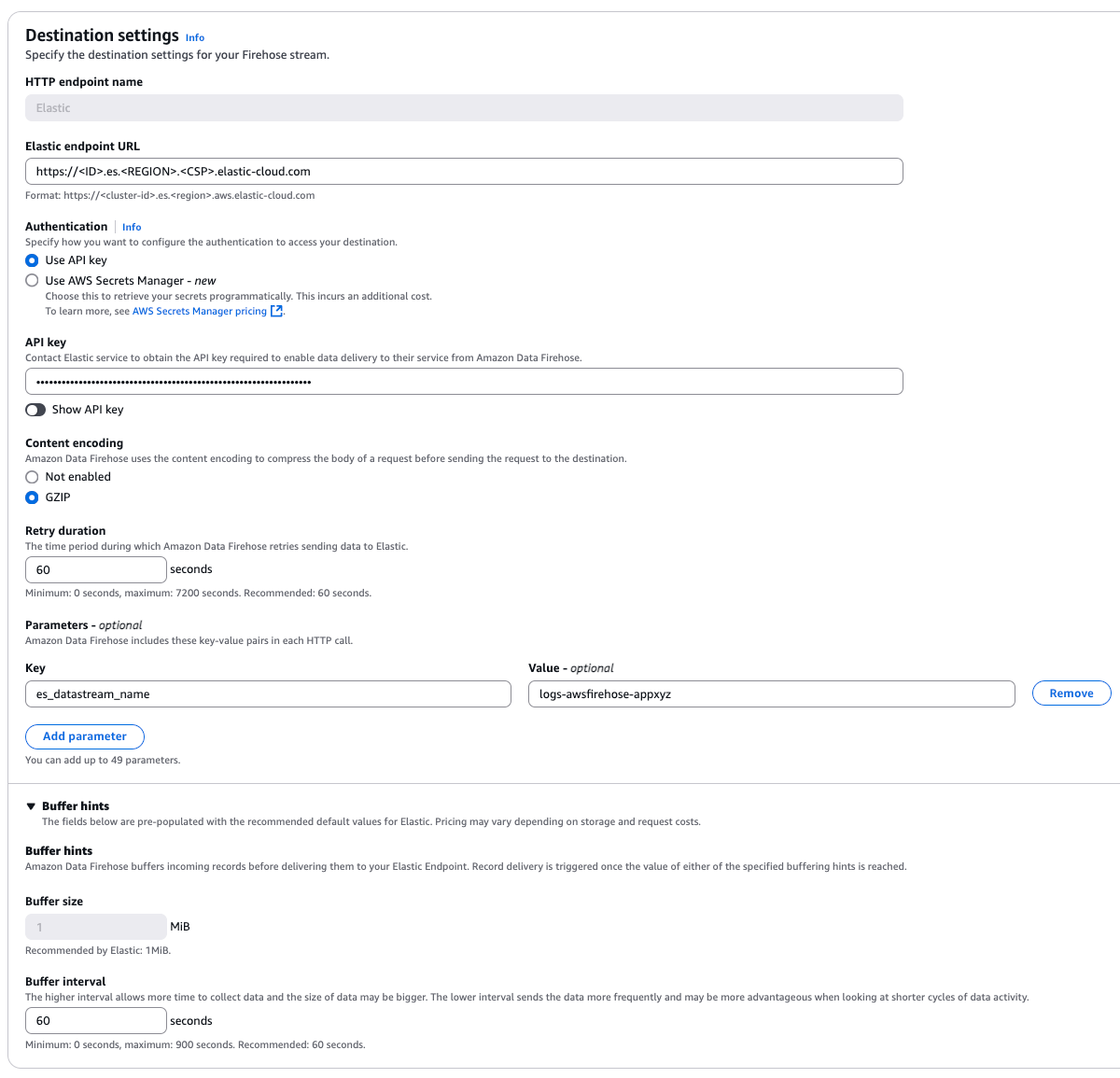

Destination settings

Set Elastic endpoint URL to point to your Elasticsearch cluster running in Elastic Cloud. This endpoint can be found from the Elastic Cloud console.

API key is the base64 encoded Elastic API key. This can be created in Kibana by following the instructions under API Keys. If you are using an API key with Restricted privileges, be sure to review the Indices privileges to provide at least "auto_configure" & "write" permissions for the indices you will be using with this delivery stream. By default, logs will be stored in

logs-awsfirehose-defaultdata stream and metrics will be stored inmetrics-aws.cloudwatch-defaultdata stream. Therefore, Elastic highly recommends givinglogs-awsfirehose-defaultandmetrics-aws.cloudwatch-defaultindices with "write" privilege.We recommend setting Content encoding to GZIP to reduce the data transfer costs.

Retry duration determines how long Firehose continues retrying the request in the event of an error. A duration between 60 and 300 seconds should be suitable for most use cases.

Elastic requires a Buffer size of

1MiBto avoid exceeding the Elasticsearchhttp.max_content_lengthsetting (typically 100MB) when the buffer is uncompressed.The default Buffer interval of

60sis recommended to ensure data freshness in Elastic.Parameters

es_datastream_name: Accepts a data stream name of your choice. You can configure this when you ingest data formats that are not officially supported and managed through your customized data streams. Also, you may use this to separate your ingested data from default indexes. By default, logs will be stored in indexes oflogs-awsfirehose-default(index templatelogs-awsfirehose) data stream and metrics will be stored in indexes ofmetrics-aws.cloudwatch-default(index templatemetrics-aws.cloudwatch) data stream. When configuring, please make sure configured API key has required permissions to ingest data into the configured data stream.include_cw_extracted_fields: Optional parameter that can be set when using a CloudWatch logs subscription filter as the Firehose data source. When set to true, extracted fields generated by the filter pattern in the subscription filter will be collected. Setting this parameter can add many fields into each record and may significantly increase data volume in Elasticsearch. As such, use of this parameter should be carefully considered and used only when the extracted fields are required for specific filtering and/or aggregation.

Backup settings It is recommended to configure S3 backup for failed records. These backups can be used to restore lost data caused by unforeseen service outages.

Send data to the Firehose delivery stream

logs Consult the AWS documentation for details on how to configure a variety of log sources to send data to Firehose delivery streams.

metrics Consult the AWS documentation for details on how to set up a metric stream in CloudWatch and Custom setup with Firehose to send metrics to Firehose. For Elastic, we only support JSON and OpenTelemetry 1.0.0 formats for the metrics.

Exported fields

| Field | Description | Type |

|---|---|---|

| @timestamp | Date/time when the event originated. This is the date/time extracted from the event, typically representing when the event was generated by the source. If the event source has no original timestamp, this value is typically populated by the first time the event was received by the pipeline. Required field for all events. | date |

| aws.cloudwatch.log_group | CloudWatch log group name. | keyword |

| aws.cloudwatch.log_stream | CloudWatch log stream name. | keyword |

| aws.firehose.arn | Firehose ARN. | keyword |

| aws.firehose.parameters | Key-value pairs users set up when creating the Kinesis Data Firehose. These parameters are included in each HTTP call. | flattened |

| aws.firehose.request_id | Firehose request ID. | keyword |

| aws.firehose.subscription_filters | Firehose request ID. | keyword |

| aws.kinesis.name | Kinesis name. | keyword |

| aws.kinesis.type | Kinesis type. | keyword |

| data_stream.dataset | The field can contain anything that makes sense to signify the source of the data. Examples include nginx.access, prometheus, endpoint etc. For data streams that otherwise fit, but that do not have dataset set we use the value "generic" for the dataset value. event.dataset should have the same value as data_stream.dataset. Beyond the Elasticsearch data stream naming criteria noted above, the dataset value has additional restrictions: * Must not contain - * No longer than 100 characters |

constant_keyword |

| data_stream.namespace | A user defined namespace. Namespaces are useful to allow grouping of data. Many users already organize their indices this way, and the data stream naming scheme now provides this best practice as a default. Many users will populate this field with default. If no value is used, it falls back to default. Beyond the Elasticsearch index naming criteria noted above, namespace value has the additional restrictions: * Must not contain - * No longer than 100 characters |

constant_keyword |

| data_stream.type | An overarching type for the data stream. Currently allowed values are "logs" and "metrics". We expect to also add "traces" and "synthetics" in the near future. | constant_keyword |

Exported fields

| Field | Description | Type | Metric Type |

|---|---|---|---|

| @timestamp | Date/time when the event originated. This is the date/time extracted from the event, typically representing when the event was generated by the source. If the event source has no original timestamp, this value is typically populated by the first time the event was received by the pipeline. Required field for all events. | date | |

| aws.*.metrics.*.* | Metrics that returned from Cloudwatch API query. | object | gauge |

| aws.cloudwatch.namespace | The namespace specified when query cloudwatch api. | keyword | |

| aws.dimensions.* | Metric dimensions. | keyword | |

| aws.exporter.arn | The metric stream Amazon Resource Name (ARN). | keyword | |

| aws.firehose.arn | Amazon Resource Name (ARN) for the firehose stream. | keyword | |

| aws.firehose.parameters | Key-value pairs users set up when creating the Kinesis Data Firehose. These parameters are included in each HTTP call. | flattened | |

| aws.firehose.request_id | HTTP request opaque GUID. | keyword | |

| aws.metrics_names_fingerprint | Autogenerated ID representing the fingerprint of the list of metrics names. For metrics coming in from Firehose, there can be cases two documents have the same timestamp, dimension, namespace, accountID, exportARN and region BUT from two different requests. With TSDB enabled, we will see documents missing if without aws.metrics_names_fingerprint field. | keyword | |

| cloud.account.id | The cloud account or organization id used to identify different entities in a multi-tenant environment. Examples: AWS account id, Google Cloud ORG Id, or other unique identifier. | keyword | |

| cloud.region | Region in which this host, resource, or service is located. | keyword | |

| data_stream.dataset | The field can contain anything that makes sense to signify the source of the data. Examples include nginx.access, prometheus, endpoint etc. For data streams that otherwise fit, but that do not have dataset set we use the value "generic" for the dataset value. event.dataset should have the same value as data_stream.dataset. Beyond the Elasticsearch data stream naming criteria noted above, the dataset value has additional restrictions: * Must not contain - * No longer than 100 characters |

constant_keyword | |

| data_stream.namespace | A user defined namespace. Namespaces are useful to allow grouping of data. Many users already organize their indices this way, and the data stream naming scheme now provides this best practice as a default. Many users will populate this field with default. If no value is used, it falls back to default. Beyond the Elasticsearch index naming criteria noted above, namespace value has the additional restrictions: * Must not contain - * No longer than 100 characters |

constant_keyword | |

| data_stream.type | An overarching type for the data stream. Currently allowed values are "logs" and "metrics". We expect to also add "traces" and "synthetics" in the near future. | constant_keyword | |

| start_timestamp | Date/time when the monitoring cycle started. | date |

Changelog

| Version | Details | Minimum Kibana version |

|---|---|---|

| 1.9.0 | Enhancement (View pull request) Improve access log classification and improve documentation |

9.0.0 8.13.0 |

| 1.8.2 | Bug fix (View pull request) Remove regex from ingest pipeline |

9.0.0 8.13.0 |

| 1.8.1 | Bug fix (View pull request) Update correct link for pull request |

9.0.0 8.13.0 |

| 1.8.0 | Enhancement (View pull request) Updates the ingest pipeline to use a more efficient script for setting event.dataset matching |

9.0.0 8.13.0 |

| 1.7.0 | Enhancement (View pull request) set event.dataset matching the routed target dataset for ingesting records |

9.0.0 8.13.0 |

| 1.6.0 | Enhancement (View pull request) Add support for AmazonMQ metrics for both managed RabbitMQ and ActiveMQ. |

9.0.0 8.13.0 |

| 1.5.2 | Bug fix (View pull request) Avoid using dynamic template for flattened fields |

9.0.0 8.13.0 |

| 1.5.1 | Enhancement (View pull request) Improve documentation. |

9.0.0 8.13.0 |

| 1.5.0 | Enhancement (View pull request) Add support for Kibana 9.0.0 |

9.0.0 8.13.0 |

| 1.4.0 | Enhancement (View pull request) Add support for Amazon Bedrock guardrails metrics. |

8.13.0 |

| 1.3.2 | Enhancement (View pull request) Add missing category. |

8.13.0 |

| 1.3.1 | Enhancement (View pull request) Update documentation about restrict API key permissions |

8.13.0 |

| 1.3.0 | Bug fix (View pull request) Add aws.firehose.arn and aws.firehose.request_id fields. Enhancement (View pull request) Add aws.metrics_names_fingerprint to cloudwatch metrics. |

8.13.0 |

| 1.2.0 | Enhancement (View pull request) Add AWS Bedrock runtime metrics to Firehose rerouting. |

8.13.0 |

| 1.1.0 | Enhancement (View pull request) Add routing rules for metrics from Firehose. |

8.13.0 |

| 1.0.0 | Enhancement (View pull request) Release package as GA. |

8.10.1 |

| 0.6.0 | Enhancement (View pull request) Rename Amazon Kinesis Data Firehose to Amazon Data Firehose |

— |

| 0.5.0 | Enhancement (View pull request) Update the package format_version to 3.0.0. |

— |

| 0.4.0 | Enhancement (View pull request) Add routing rules for cloudfront log, elb log and s3access log. |

— |

| 0.3.0 | Enhancement (View pull request) remove redudant .logs suffix from dataset name |

— |

| 0.2.1 | Enhancement (View pull request) Route WAF logs based on log format |

— |

| 0.2.0 | Enhancement (View pull request) Add support for routing api gateway logs |

— |

| 0.1.0 | Enhancement (View pull request) initial release |

— |