Integrate with third-party services

Elastic Stack Serverless

Elasticsearch provides a machine learning inference API for creating and managing inference endpoints that integrate with machine learning models provided by popular third-party services such as Amazon Bedrock, Anthropic, Azure AI Studio, Cohere, Google AI, Mistral, OpenAI, Hugging Face, and more.
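As a rough illustration, the sketch below uses only Python's standard library to build, but not send, a request that creates an inference endpoint through the REST API. It assumes the `PUT _inference/<task_type>/<inference_id>` path shape with a `service` and `service_settings` body. The cluster URL, the endpoint ID `my-openai-embeddings`, the model ID, and the API key placeholder are hypothetical values chosen for illustration. Each service expects its own `service_settings`, so check the service-specific subpages for the exact fields.

```python
import json
import urllib.request

# Hypothetical cluster URL; replace it with your own deployment's endpoint.
ES_URL = "https://localhost:9200"


def build_create_endpoint_request(task_type, inference_id, service, service_settings):
    """Build (but do not send) a PUT request that creates an inference endpoint.

    Assumes the path shape PUT _inference/<task_type>/<inference_id>.
    """
    body = {"service": service, "service_settings": service_settings}
    return urllib.request.Request(
        url=f"{ES_URL}/_inference/{task_type}/{inference_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Hypothetical example: a text embedding endpoint backed by OpenAI.
req = build_create_endpoint_request(
    task_type="text_embedding",
    inference_id="my-openai-embeddings",
    service="openai",
    service_settings={
        "api_key": "<OPENAI_API_KEY>",  # placeholder; never hard-code real keys
        "model_id": "text-embedding-3-small",
    },
)
print(req.get_method(), req.full_url)
```

To actually send the request, you would also add an `Authorization` header (for example, an Elasticsearch API key) and pass the request to `urllib.request.urlopen`. Building the request separately keeps the example runnable without a cluster.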

Learn how to integrate with specific services in the subpages of this section.

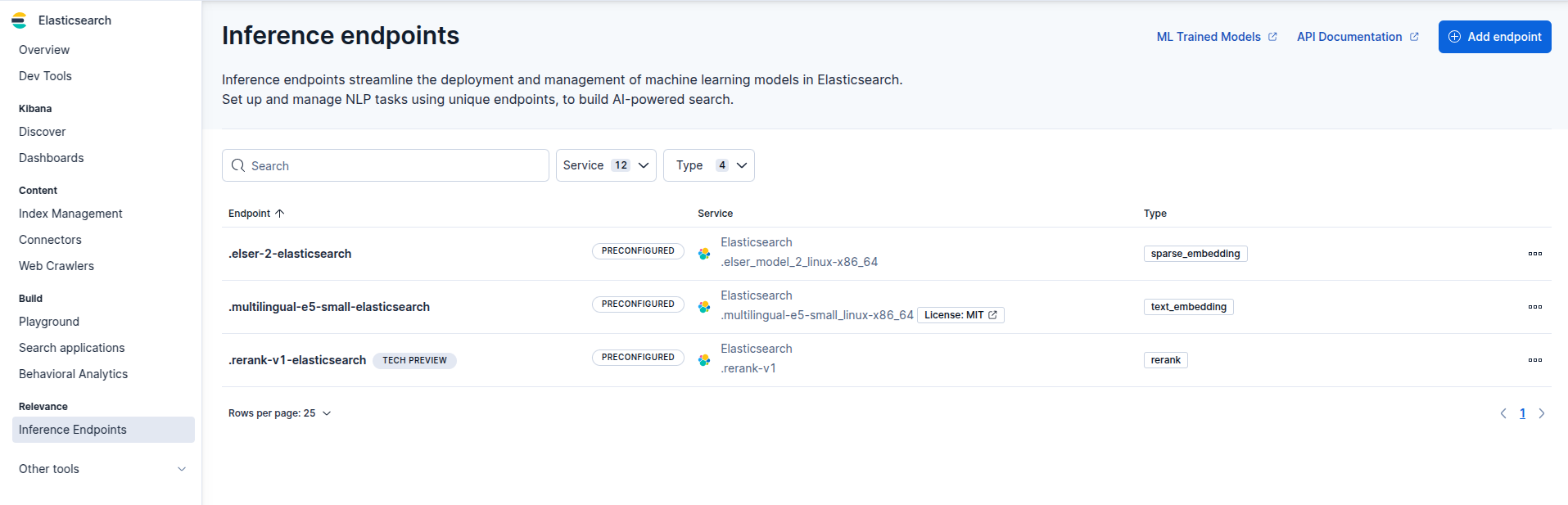

You can also manage inference endpoints in the UI, on the Inference endpoints page.

Available actions:

- Add new endpoint

- View endpoint details

- Copy the inference endpoint ID

- Delete endpoints
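Most of these UI actions have rough REST counterparts. The sketch below builds, without sending, the corresponding requests: listing all endpoints, viewing one endpoint's details, and deleting it. The inference endpoint ID you copy in the UI is the value that goes in the `<inference_id>` part of the path. It assumes the `GET _inference/_all`, `GET _inference/<task_type>/<inference_id>`, and `DELETE _inference/<task_type>/<inference_id>` path shapes. The cluster URL and the endpoint ID `my-openai-embeddings` are hypothetical.

```python
import urllib.request

ES_URL = "https://localhost:9200"  # hypothetical cluster URL


def inference_request(method, *path_parts):
    """Build (but do not send) a body-less request against the _inference API."""
    path = "/".join(["_inference", *path_parts])
    return urllib.request.Request(url=f"{ES_URL}/{path}", method=method)


# Rough API counterparts of the UI actions (the endpoint ID is hypothetical).
list_all = inference_request("GET", "_all")
details = inference_request("GET", "text_embedding", "my-openai-embeddings")
delete = inference_request("DELETE", "text_embedding", "my-openai-embeddings")

for req in (list_all, details, delete):
    print(req.get_method(), req.full_url)
```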

To add a new inference endpoint using the UI:

- Select the Add endpoint button.

- Select a service from the drop-down menu.

- Provide the required configuration details.

- Select Save to create the endpoint.