Heartbeat quick start: installation and configuration

This guide describes how to get started quickly collecting uptime data about your hosts. You’ll learn how to:

- install Heartbeat

- specify the protocols to monitor

- send uptime data to Elasticsearch

- visualize the uptime data in Kibana

You need Elasticsearch for storing and searching your data, and Kibana for visualizing and managing it.

To get started quickly, spin up a deployment of our hosted Elasticsearch Service. The Elasticsearch Service is available on AWS, GCP, and Azure. Try it out for free.

To install and run Elasticsearch and Kibana, see Installing the Elastic Stack.

Unlike most Beats, which you install on edge nodes, you typically install Heartbeat as part of a monitoring service that runs on a separate machine and possibly even outside of the network where the services that you want to monitor are running.

To download and install Heartbeat, use the commands that work with your system:

Version 9.0.0-beta1 of Heartbeat has not yet been released.


The commands shown are for AMD platforms, but ARM packages are also available. Refer to the download page for the full list of available packages.

Connections to Elasticsearch and Kibana are required to set up Heartbeat.

Set the connection information in heartbeat.yml. To locate this configuration file, see Directory layout.

Specify the cloud.id of your Elasticsearch Service, and set cloud.auth to a user who is authorized to set up Heartbeat. For example:

cloud.id: "staging:dXMtZWFzdC0xLmF3cy5mb3VuZC5pbyRjZWM2ZjI2MWE3NGJmMjRjZTMzYmI4ODExYjg0Mjk0ZiRjNmMyY2E2ZDA0MjI0OWFmMGNjN2Q3YTllOTYyNTc0Mw=="
cloud.auth: "heartbeat_setup:{pwd}"

This example shows a hard-coded password, but you should store sensitive values in the secrets keystore.

Set the host and port where Heartbeat can find the Elasticsearch installation, and set the username and password of a user who is authorized to set up Heartbeat. For example:

output.elasticsearch:
  hosts: ["https://myEShost:9200"]
  username: "heartbeat_internal"
  password: "{pwd}"
  ssl:
    enabled: true
    ca_trusted_fingerprint: "b9a10bbe64ee9826abeda6546fc988c8bf798b41957c33d05db736716513dc9c"

- password: This example shows a hard-coded password, but you should store sensitive values in the secrets keystore.

- ca_trusted_fingerprint: This example shows a hard-coded fingerprint, but you should store sensitive values in the secrets keystore. The fingerprint is the hex-encoded SHA-256 hash of a CA certificate. When you start Elasticsearch for the first time, security features such as network encryption (TLS) are enabled by default. If you are using the self-signed certificate that Elasticsearch generates on first startup, add its fingerprint here. The fingerprint is printed in the Elasticsearch startup logs; for other ways to retrieve it, see the documentation on connecting clients to Elasticsearch. If you provide your own SSL certificate to Elasticsearch, see the Heartbeat documentation on how to set up SSL.
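Because the fingerprint is the hex-encoded SHA-256 hash of the CA certificate, you can also compute it yourself from the certificate file. The Python sketch below uses only the standard library. The function name ca_fingerprint is hypothetical, and the sketch assumes the file contains exactly one PEM-encoded certificate. On a default self-managed installation the auto-generated CA is usually stored as config/certs/http_ca.crt, but treat that path as an assumption and check your own setup.

```python
import hashlib
import ssl


def ca_fingerprint(pem_text: str) -> str:
    """Return the lowercase hex SHA-256 fingerprint of a single PEM certificate.

    Hypothetical helper: it only decodes the PEM block and hashes it; it does
    not validate that the bytes form a real, trusted certificate.
    """
    # Certificate fingerprints are computed over the DER (binary) form, not the PEM text
    der_bytes = ssl.PEM_cert_to_DER_cert(pem_text.strip())
    return hashlib.sha256(der_bytes).hexdigest()
```

For example, ca_fingerprint(open("http_ca.crt").read()) returns a 64-character lowercase hex string in the same form as the ca_trusted_fingerprint value above. Compare it with the value printed in the Elasticsearch startup logs before you rely on it.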

If you plan to use our pre-built Kibana dashboards, configure the Kibana endpoint. Skip this step if Kibana is running on the same host as Elasticsearch.

setup.kibana:
  host: "mykibanahost:5601"
  username: "my_kibana_user"
  password: "{pwd}"

- host: The hostname and port of the machine where Kibana is running, for example, mykibanahost:5601. If you specify a path after the port number, include the scheme and port: http://mykibanahost:5601/path.

- username and password: These settings are optional. If you don’t specify credentials for Kibana, Heartbeat uses the username and password specified for the Elasticsearch output.

- To use the pre-built Kibana dashboards, this user must be authorized to view dashboards or have the kibana_admin built-in role.

To learn more about required roles and privileges, see Grant users access to secured resources.

You can send data to other outputs, such as Logstash, but that requires additional configuration and setup.

Heartbeat provides monitors to check the status of hosts at set intervals. Heartbeat currently provides monitors for ICMP, TCP, and HTTP (see Heartbeat overview for more about these monitors).
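To make the idea of a monitor concrete, the following minimal Python sketch shows what a single TCP check boils down to: try to open a connection, then record whether the service is up or down and how long the attempt took. It is an illustration under simplifying assumptions, not Heartbeat's implementation; the function name tcp_check and the shape of the returned dictionary are hypothetical.

```python
import socket
import time


def tcp_check(host: str, port: int, timeout: float = 2.0) -> dict:
    """Report 'up' if a TCP connection to host:port succeeds within timeout, else 'down'."""
    started = time.monotonic()
    try:
        # create_connection resolves the host and tries each address until one connects
        with socket.create_connection((host, port), timeout=timeout):
            status = "up"
    except OSError:  # connection refused, unreachable, timed out, or DNS failure
        status = "down"
    elapsed_ms = (time.monotonic() - started) * 1000.0
    return {"host": host, "port": port, "status": status, "duration_ms": round(elapsed_ms, 3)}
```

Heartbeat runs checks like this on a schedule and ships each result as an event. Its real tcp monitor offers more than this sketch, for example the mode setting that controls whether one or all resolved IPs are checked.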

You configure each monitor individually. In heartbeat.yml, specify the list of monitors that you want to enable. Each item in the list begins with a dash (-). The following example configures Heartbeat to use three monitors: an icmp monitor, a tcp monitor, and an http monitor.

heartbeat.monitors:
- type: icmp
  schedule: '*/5 * * * * * *'
  hosts: ["myhost"]
  id: my-icmp-service
  name: My ICMP Service
- type: tcp
  schedule: '@every 5s'
  hosts: ["myhost:12345"]
  mode: any
  id: my-tcp-service
- type: http
  schedule: '@every 5s'
  urls: ["http://example.net"]
  service.name: apm-service-name
  id: my-http-service
  name: My HTTP Service

- The icmp monitor is scheduled to run exactly every 5 seconds (10:00:00, 10:00:05, and so on). The schedule option uses a cron-like syntax based on the cronexpr implementation.

- The tcp monitor is set to run every 5 seconds from the time when Heartbeat was started. Heartbeat adds the @every keyword to the syntax provided by the cronexpr package.

- The mode option specifies whether to ping one IP (any) or all resolvable IPs (all).

- The service.name field can be used to integrate Heartbeat with Elastic APM via the Uptime UI.
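The difference between the two scheduling styles above can be modeled in a few lines of Python. This is a simplified sketch, not Heartbeat's scheduler: it assumes the cron expression only constrains the seconds field (as in '*/5 * * * * * *'), and that @every runs at fixed offsets from the moment Heartbeat started. The function names are hypothetical.

```python
from datetime import datetime, timedelta


def next_cron_seconds(now: datetime, step_s: int) -> datetime:
    """Next run for a '*/step_s' seconds field: seconds 0, step_s, 2*step_s, ... of each minute."""
    minute_start = now.replace(second=0, microsecond=0)
    elapsed = (now - minute_start).total_seconds()
    candidate = minute_start + timedelta(seconds=(int(elapsed // step_s) + 1) * step_s)
    next_minute = minute_start + timedelta(minutes=1)
    # The step restarts at second 0 of every minute, so never run past the minute boundary
    return min(candidate, next_minute)


def next_every(started: datetime, now: datetime, interval_s: int) -> datetime:
    """Next run for '@every <interval_s>s': fixed offsets from when Heartbeat started."""
    elapsed = (now - started).total_seconds()
    return started + timedelta(seconds=(int(elapsed // interval_s) + 1) * interval_s)


started = datetime(2024, 1, 1, 10, 0, 1, 500000)  # Heartbeat started at 10:00:01.5
now = datetime(2024, 1, 1, 10, 0, 2, 300000)
print(next_cron_seconds(now, 5))  # 2024-01-01 10:00:05 (aligned to the clock)
print(next_every(started, now, 5))  # 2024-01-01 10:00:06.500000 (aligned to the start time)
```

The cron form keeps runs on wall-clock boundaries across restarts, which makes results from several Heartbeat instances line up; the @every form spaces runs evenly from whenever the process started.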

To test your configuration file, change to the directory where the Heartbeat binary is installed, and run Heartbeat in the foreground with the following options specified: ./heartbeat test config -e. Make sure your config files are in the path expected by Heartbeat (see Directory layout), or use the -c flag to specify the path to the config file.

For more information about configuring Heartbeat, also see:

- Configure Heartbeat

- Config file format

- heartbeat.reference.yml: This reference configuration file shows all non-deprecated options. You’ll find it in the same location as heartbeat.yml.

Heartbeat can be deployed in multiple locations so that you can detect differences in availability and response times across those locations. Configure the Heartbeat location to allow Kibana to display location-specific information on Uptime maps and perform Uptime anomaly detection based on location.

To configure the location of a Heartbeat instance, modify the add_observer_metadata processor in heartbeat.yml. The following example specifies the geo.name of the add_observer_metadata processor as us-east-1a:

# ============================ Processors ============================
processors:
  - add_observer_metadata:
      # Optional, but recommended geo settings for the location Heartbeat is running in
      geo:
        # Token describing this location
        name: us-east-1a
        # Lat, Lon
        #location: "37.926868, -78.024902"

- Uncomment the geo setting.

- Uncomment name and assign the name of the location of the Heartbeat server.

- Optionally uncomment location and assign the latitude and longitude.
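The location value is a single "lat, lon" string. If you generate heartbeat.yml for many locations, a small pre-flight check can catch malformed or out-of-range coordinates before Heartbeat starts. The Python sketch below is hypothetical (parse_location is not part of Heartbeat) and only checks the string format shown in the example above; it cannot detect a latitude and longitude that were swapped but are both still in range.

```python
def parse_location(value: str) -> tuple[float, float]:
    """Parse a 'lat, lon' string and check that both values are in range."""
    parts = [part.strip() for part in value.split(",")]
    if len(parts) != 2:
        raise ValueError(f"expected 'lat, lon', got {value!r}")
    lat, lon = float(parts[0]), float(parts[1])  # float() raises ValueError on non-numbers
    if not -90.0 <= lat <= 90.0:
        raise ValueError(f"latitude out of range: {lat}")
    if not -180.0 <= lon <= 180.0:
        raise ValueError(f"longitude out of range: {lon}")
    return lat, lon
```

For the example above, parse_location("37.926868, -78.024902") returns (37.926868, -78.024902).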


Heartbeat comes with predefined assets for parsing, indexing, and visualizing your data. To load these assets:

Make sure the user specified in heartbeat.yml is authorized to set up Heartbeat. From the installation directory, run:

DEB and RPM:

```
heartbeat setup -e
```

macOS and Linux:

```
./heartbeat setup -e
```

Windows:

```
PS > .\heartbeat.exe setup -e
```

To start Heartbeat, run the command for your platform.

DEB and RPM:

sudo service heartbeat-elastic start

If you use an init.d script to start Heartbeat, you can’t specify command-line flags (see Command reference). To specify flags, start Heartbeat in the foreground.

Also see Heartbeat and systemd.

macOS and Linux:

sudo chown root heartbeat.yml
sudo ./heartbeat -e

You’ll be running Heartbeat as root, so you need to change ownership of the configuration file, or run Heartbeat with --strict.perms=false specified. See Config File Ownership and Permissions.

Windows:

PS C:\Program Files\heartbeat> Start-Service heartbeat

By default, Windows log files are stored in C:\ProgramData\heartbeat\Logs.

Heartbeat is now ready to check the status of your services and send events to your defined output.

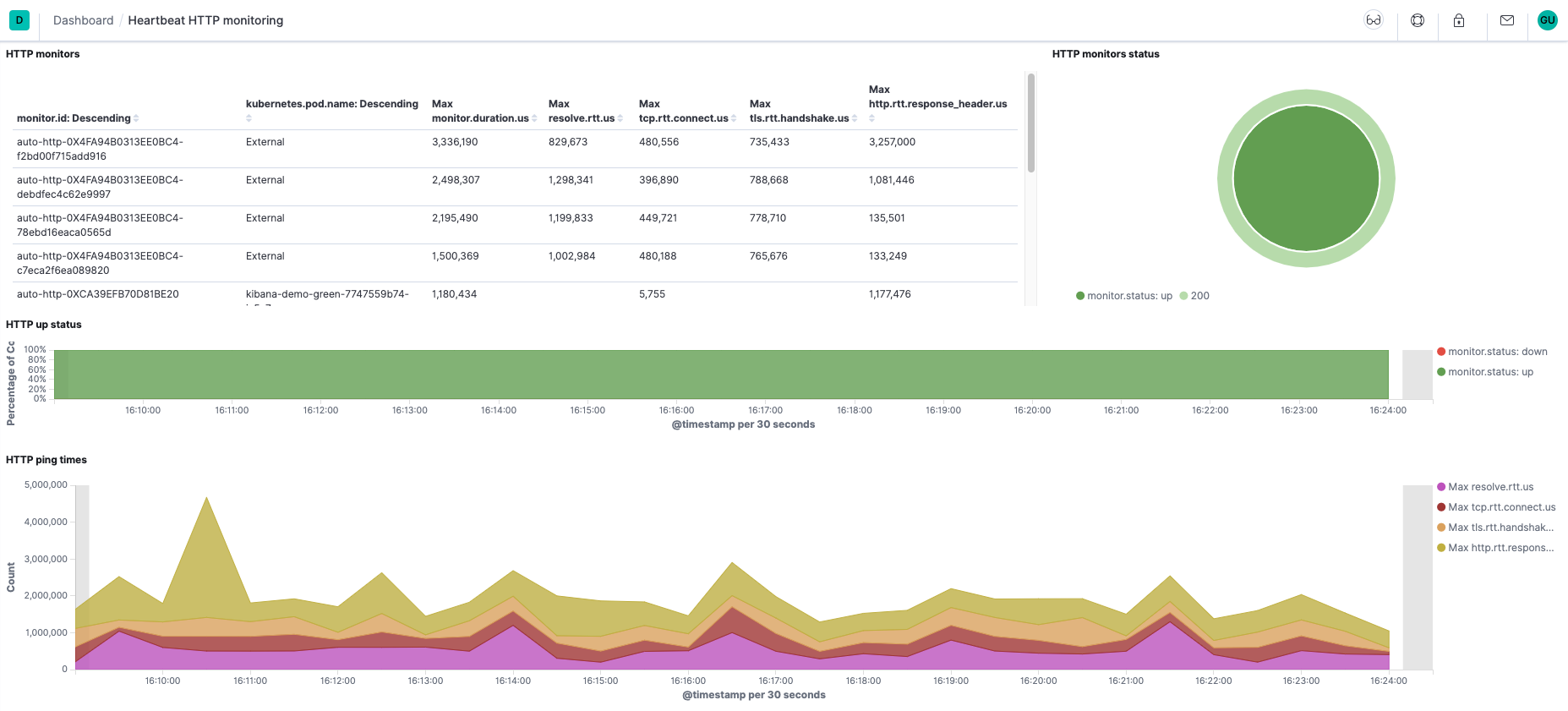

Heartbeat comes with pre-built Kibana dashboards and UIs for visualizing the status of your services. The dashboards are available in the uptime-contrib GitHub repository.

If you loaded the dashboards earlier, open them now.

To open the dashboards:

Launch Kibana:

<div class="tabs" data-tab-group="host">

<div role="tablist" aria-label="Open Kibana">

<button role="tab"

aria-selected="true"

aria-controls="cloud-tab-open-kibana"

id="cloud-open-kibana">

Elasticsearch Service

</button>

<button role="tab"

aria-selected="false"

aria-controls="self-managed-tab-open-kibana"

id="self-managed-open-kibana"

tabindex="-1">

Self-managed

</button>

</div>

<div tabindex="0"

role="tabpanel"

id="cloud-tab-open-kibana"

aria-labelledby="cloud-open-kibana">

- Log in to your Elastic Cloud account.

- Navigate to the Kibana endpoint in your deployment.

</div>

<div tabindex="0"

role="tabpanel"

id="self-managed-tab-open-kibana"

aria-labelledby="self-managed-open-kibana"

hidden="">

Point your browser to http://localhost:5601, replacing localhost with the name of the Kibana host.</div>

</div>

In the side navigation, click Discover. To see Heartbeat data, make sure the predefined heartbeat-* data view is selected.

Tip: If you don’t see data in Kibana, try changing the time filter to a larger range. By default, Kibana shows the last 15 minutes.

In the side navigation, click Dashboard, then select the dashboard that you want to open.

The dashboards are provided as examples. We recommend that you customize them to meet your needs.
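The same heartbeat-* data that Discover shows can also be queried directly, which is handy for scripted checks. The Python sketch below builds an Elasticsearch search body that counts "down" checks per monitor and per location over the last 15 minutes, then posts it with the standard library. It assumes Heartbeat events carry the monitor.status, monitor.id, and observer.geo.name fields (the last one set by the add_observer_metadata processor configured earlier), and that the user you pass has read access to heartbeat-*. The function names are hypothetical.

```python
import base64
import json
import ssl
import urllib.request


def down_monitors_query(since: str = "now-15m") -> dict:
    """Search body: count 'down' checks per monitor and location since `since`."""
    return {
        "size": 0,  # only the aggregations are needed, not the individual events
        "query": {
            "bool": {
                "filter": [
                    {"term": {"monitor.status": "down"}},
                    {"range": {"@timestamp": {"gte": since}}},
                ]
            }
        },
        "aggs": {
            "by_monitor": {
                "terms": {"field": "monitor.id", "size": 50},
                "aggs": {"by_location": {"terms": {"field": "observer.geo.name"}}},
            }
        },
    }


def search_heartbeat(es_url: str, user: str, password: str, cafile: str, body: dict) -> dict:
    """POST `body` to <es_url>/heartbeat-*/_search using basic auth over TLS."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        f"{es_url}/heartbeat-*/_search",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
    # Trust the CA that signed the Elasticsearch HTTP certificate (for example, the self-signed CA)
    context = ssl.create_default_context(cafile=cafile)
    with urllib.request.urlopen(request, context=context) as response:
        return json.load(response)
```

For example, search_heartbeat("https://myEShost:9200", "my_reader", password, "http_ca.crt", down_monitors_query()) returns the raw search response; the per-monitor counts are under aggregations.by_monitor.buckets. The user name and certificate path are placeholders.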

Now that you have your uptime data streaming into Elasticsearch, learn how to unify your logs, metrics, uptime, and application performance data.

Ingest data from other sources by installing and configuring other Elastic Beats:

| Elastic Beats | To capture |
|---|---|
| Metricbeat | Infrastructure metrics |
| Filebeat | Logs |
| Winlogbeat | Windows event logs |
| APM | Application performance metrics |
| Auditbeat | Audit events |

Use the Observability apps in Kibana to search across all your data:

| Elastic apps | Use to |
|---|---|
| Metrics app | Explore metrics about systems and services across your ecosystem |
| Logs app | Tail related log data in real time |
| Uptime app | Monitor availability issues across your apps and services |
| APM app | Monitor application performance |
| SIEM app | Analyze security events |